Lorenzo Alberton

« Articles

On batching vs. latency, and jobqueue models

Abstract: A little journey exploring job queue models and debunking some programming folklore around the effects of batching on latency.

Last week at work, as part of an ongoing effort to constantly improve performances and scalability of our platform, I started investigating one of our oldest pieces of software infrastructure, i.e. the job queue framework we use for work distribution in our main service tasks.

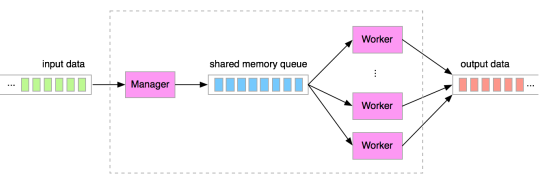

The basic structure is more or less this:

i.e. a Manager process that controls workers (their status and cardinality), fetches data in large chunks from upstream and feeds worker processes waiting for work with a smaller chunk of data each. A shared memory segment is used as internal buffer. Each worker can process data in parallel before sending results downstream and becoming free to accept more work again.

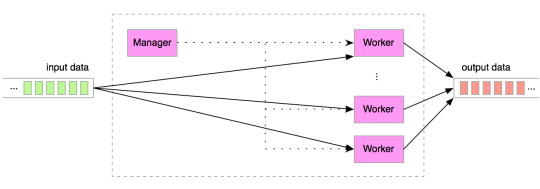

After briefly discussing this with a colleague (did I ever mention we work with really smart people?), we agreed that a much more efficient approach would be to let the workers connect to the data provider directly, leaving the manager with the task of controlling status and cardinality of the workers, but stripping it of the responsibility of work distribution. As it often happens when optimising software, the new design was simpler (fewer moving parts):

To me and a few other colleagues, the potential improvements were fairly obvious, but I was surprised to hear some strong arguments against this approach from other experienced programmers. The counter-arguments sounded solid, but weren't substantiated by actual numbers, highlighting how we often believe (and are willing to defend!) things without verifying our assumptions.

So let me debunk these assertions :-)

Remark #1: accessing a shared-memory queue is orders of magnitudes faster than anything else

Accessing memory is indeed usually fast, but how memory is accessed is important too, and in this specific case the argument doesn't hold for several reasons:

- to enqueue/dequeue items in the shared memory buffer, thousands of system calls per second are actually required, which incur in a big CPU penalty

- however fast, it's an extra hop

- although fetching items from the provider happens (ideally) in large batches, the items are processed serially when inserting and removing from the buffer, and the workers are served serially too

- each item is added and removed from the CPU cache at least twice

- the contention on the data provider is not removed, alas merely moved downstream to another queue

Remark #2: Batching increases latency

"Now that many workers have direct and concurrent access to the source, to avoid hitting it too often, each worker must request a larger batch, but that means introducing a higher latency - it's better to increase the number of workers and let them process fewer items each in parallel".

This is a sensible objection, and yet why can it be wrong under many circumstances? Two reasons:

- unless the work is I/O-bound, having more workers than CPU cores only increases contention and context-switching in the OS' scheduler; a single process/thread per CPU core is the best configuration to maximise CPU efficiency

- batching improves throughput and actually decreases latency. Explaining this requires a little theory. There are two kinds of batching:

- you can wait for the batch to fill up or a timeout to occur, and then ship it; example: Nagle's algorithm. Performances of this approach are not much better than serial delivery. This adds latency.

- you can ship the first item that's available, process it, then come back and take all the data that was produced in the meantime, and keep looping that way.

Applying Little's Law to an example, if you need to send 10 messages to a device with 100µs latency, a serial delivery would take 1ms, whilst, assuming it ships one item first, and then the other 9 in another batch, batching strategy #2 would have an average latency of at most 190µs (although on average 5 items will be available for each delivery, resulting in an average latency of only 150µs). Batching can decrease latency.

I do however concede that the original statement might be true if a) the task is NOT CPU-bound and b) after fetching a batch, the items are processed serially within a single worker, when another worker might be have been idle and able to process some items (on an idle CPU core).

Remark #3: Prefetching a large batch of items speeds up work distribution

The main idea behind the original job queue was to minimise the number of requests to the data provider, by fetching data in large chunks, and have each worker consume a smaller batch each, from a fast memory buffer. Now, ignoring the fact that modern data sources can happily sustain 100K+ connections per second (making the need for one single collector go away), this remark is based on the assumption that the internal queue is always half full, and the Manager process is actually reading large chunks all the time.

Reality is, queues are on average either completely full or empty. In fact, it's very difficult to have a perfect ideal balance between production and consumption rates. You'd rather hope consumption always outpaces production (or you step into the nasty business of unbounded queues).

So assuming we can always keep up with the producer, once the worker is done, it will either find very few new items available in the queue, or none at all. The Manager at this point would no longer fetch large chunks from upstream, but batches similar to the ones processed by each worker, effectively losing the economy of scale and becoming a pointless intermediary.

With each worker having direct access to the source, there's still the problem of stampedes when many of them are free; you can avoid trashing CPU and the producer by having blocking reads (preferred) or, lacking the first option, by backing off for a few ms when there's no work to do. In this situation, the number of requests to the producer shouldn't be very different from the one-reader scenario we started with.

There are other notes regarding queue theory (push vs pull) I'd like to add, but I'll leave them for another blog post.

Results

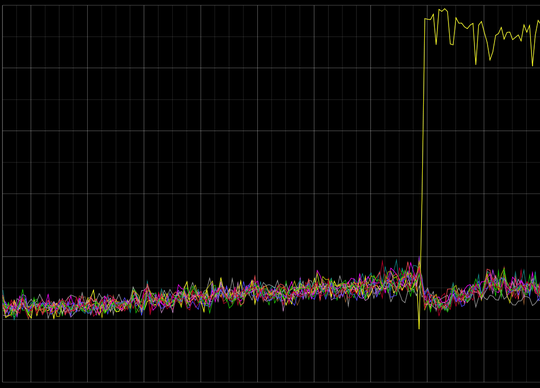

To make a long story short, I spent a couple of hours simplifying the original job queue for a few tasks, and implementing the second strategy, with results that speak for themselves: much better use of all CPU cores, decreased load, decreased latency and, best of all, given it's no longer artificially throttled, a single node can now take 5 times more traffic, with fewer workers:

Conclusion

I wanted to share this story to explain why having a good understanding of CPU and memory models and a little queue theory is important, and to show an example of the sort of challenges we face daily at DataSift (if this is what motivates you and want to work with us, ping me).

The juxtaposition of two platform alerts just blew my mind, We measure platform traffic in tens of gigabits and our latency in µseconds.

— Gareth Llewellyn (@NetworkString) November 9, 2012

I was also inspired by a great talk on programming folklore of one of my favourite engineers, Martin Thompson of LMAX fame: never miss his talks, they're all excellent.

My take-away lessons: be careful with assumptions (at least, re-evaluate them often!), measure everything, avoid complexity.

Take care!

Latest articles

- On batching vs. latency, and jobqueue models

- Updated Kafka PHP client library

- Musings on some technical papers I read this weekend: Google Dremel, NoSQL comparison, Gossip Protocols

- Historical Twitter access - A journey into optimising Hadoop jobs

- Kafka proposed as Apache incubator project

- NoSQL Databases: What, When and Why (PHPUK2011)

- PHPNW10 slides and new job!

Filter articles by topic

AJAX, Apache, Book Review, Charset, Cheat Sheet, Data structures, Database, Firebird SQL, Hadoop, Imagick, INFORMATION_SCHEMA, JavaScript, Kafka, Linux, Message Queues, mod_rewrite, Monitoring, MySQL, NoSQL, Oracle, PDO, PEAR, Performance, PHP, PostgreSQL, Profiling, Scalability, Security, SPL, SQL Server, SQLite, Testing, Tutorial, TYPO3, Windows, Zend FrameworkFollow @lorenzoalberton